How do you run a HIPAA-compliant LLM?

There's a lot of foundational work that goes into building a HIPAA compliant application, both regulatory and technical.

As always, it's about 80% paperwork and 20% engineering. And while working with Large Language Models (LLMs) isn't vastly different from traditional machine learning, a few risk areas stand out:

- Processing and storing Personally Identifiable Information (PII) in unstructured formats.

- Dependence on third-party LLM providers.

Building in Safeguards

Here are some architectural patterns we've adopted to address the higher-risk, more LLM-specific vulnerabilities. The main considerations are:

- PII Substitution at LLM Request Time: Substitute PII before sending requests to the LLM to minimize risk.

- Understanding Contracts with LLM Providers: Ensure that contracts with third-party providers clearly define how they handle and protect PII.

A lot of our specific architecture is related to AWS, but the principles and tools are similar across other cloud providers.

Processing & Storing Unstructured PII

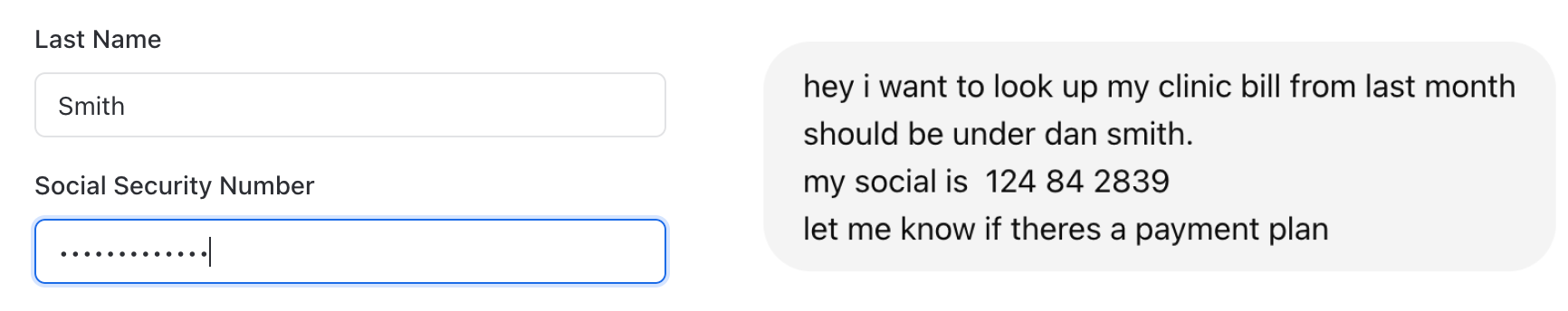

Chat, and increasingly voice, applications make it very easy to take in and store protected information, potentially without realizing it. An obvious example is the difference between collecting data through structured input and collecting it through chat messages.

In the first scenario above, it's pretty easy to know that the SSN should be treated as PII, and subsequently stored in an encrypted manner.

The chat scenario, however, is much more difficult: you need to parse each message to identify any PII the user discloses.
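As a rough illustration of what parsing each message can look like, here is a minimal sketch of rule-based PII detection. The pattern set, the `PiiMatch` type, and the `find_pii` function are all hypothetical names made up for this example, and the regexes only cover a few US-style formats. In practice you would likely pair rules like these with a named-entity model or a managed detection service, since names and addresses can't be caught by pattern alone.

```python
import re
from typing import NamedTuple

# Illustrative patterns only (assumption): real coverage needs far more than this.
PII_PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


class PiiMatch(NamedTuple):
    kind: str   # which pattern matched, e.g. "SSN"
    text: str   # the matched substring
    start: int  # start offset in the message
    end: int    # end offset (exclusive)


def find_pii(message: str) -> list[PiiMatch]:
    """Return every pattern match in the message, ordered by position."""
    matches = [
        PiiMatch(kind, m.group(), m.start(), m.end())
        for kind, pattern in PII_PATTERNS.items()
        for m in pattern.finditer(message)
    ]
    return sorted(matches, key=lambda match: match.start)


if __name__ == "__main__":
    msg = "Hi, my SSN is 123-45-6789 and my email is dan@example.com"
    for match in find_pii(msg):
        print(match.kind, match.text)
```

The offsets returned with each match make it possible to encrypt or replace just the sensitive span before the message is stored.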

PII Substitution in LLM Prompts

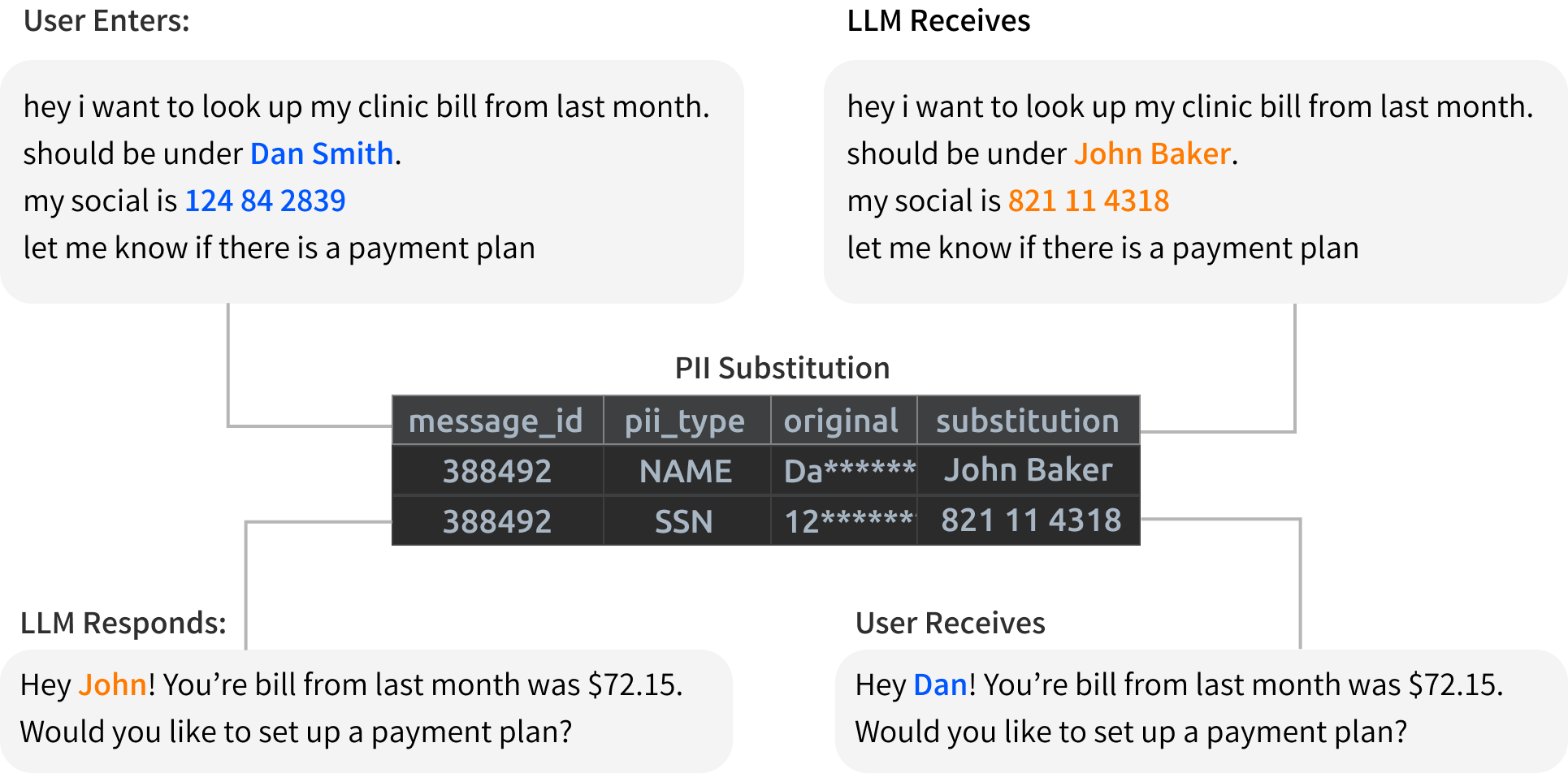

Whether you use a third-party or self-hosted LLM, implementing PII redaction in messages enhances security. However, simple redaction isn't enough for LLM applications since the models need context to function correctly. Replacing a user's name with "**** *****" can lead to unpredictable results.

Instead, we create a lookup table that replaces each piece of PII with a semantically similar value. That means `Dan Smith` is replaced with a randomly generated `John Baker` at query time, while the real user information is stored securely.
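The lookup-table idea can be sketched roughly as follows. This is not our production implementation: `PiiSubstituter`, `FAKE_NAMES`, and the method names are hypothetical, the stand-in pool is tiny, and the in-memory dictionaries stand in for whatever encrypted store actually holds the real values.

```python
import random

# Hypothetical pool of stand-in names; a real system would generate these.
FAKE_NAMES = ["John Baker", "Maria Lopez", "Priya Nair", "Tom Fischer"]


class PiiSubstituter:
    """Maps real values to stand-ins for one conversation, and back again."""

    def __init__(self, seed=None):
        self._rng = random.Random(seed)
        # Assumption: in production these mappings live in an encrypted store.
        self._real_to_fake = {}
        self._fake_to_real = {}

    def register(self, real_value: str) -> str:
        """Assign a stand-in to a real value, reusing any earlier assignment."""
        if real_value in self._real_to_fake:
            return self._real_to_fake[real_value]
        unused = [
            name for name in FAKE_NAMES
            if name not in self._fake_to_real and name != real_value
        ]
        if not unused:
            raise RuntimeError("stand-in pool exhausted")
        fake = self._rng.choice(unused)
        self._real_to_fake[real_value] = fake
        self._fake_to_real[fake] = real_value
        return fake

    def substitute(self, text: str) -> str:
        """Swap real values for stand-ins before the text goes to the LLM."""
        for real, fake in self._real_to_fake.items():
            text = text.replace(real, fake)
        return text

    def restore(self, text: str) -> str:
        """Swap stand-ins back to real values in the LLM's response."""
        for fake, real in self._fake_to_real.items():
            text = text.replace(fake, real)
        return text


if __name__ == "__main__":
    sub = PiiSubstituter()
    sub.register("Dan Smith")
    prompt = sub.substitute("Write a reminder for Dan Smith about his visit.")
    print(prompt)               # the stand-in name appears instead of "Dan Smith"
    print(sub.restore(prompt))  # the original text comes back
```

Keeping the mapping stable for the length of a conversation matters: if the same person received a different stand-in on every request, the model would lose track of who is who across turns.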

Using 3rd Party LLM Providers

Deploying an LLM is hard. The models are huge (Llama 3 70B is roughly 40 GB even when quantized to 4 bits, and about 140 GB at 16-bit precision) and can require on the order of 1000x the compute of a traditional web server. Even if models shrink to half that size, it’s likely most companies will stick with dedicated model providers.

Securing agreements with LLM Providers

There is increasing demand for clearly defined terms governing model use and the ownership of prompts and inputs, especially given stories of OpenAI’s questionable acquisition of training data. To address these concerns, many model providers now offer Business Associate Agreements (BAAs) or Data Processing Agreements.

Despite these offerings, many health tech organizations still prefer to use self-hosted models. This trend is driven by the desire for greater control over data security and compliance with regulatory requirements, ensuring that sensitive health information is handled appropriately and securely within their own infrastructure.

Using OpenAI

OpenAI has recently started offering Business Associate Agreements that likely pass muster under HIPAA requirements. The process is a little opaque (just email baa@openai.com) and the standard copy is not public, so it’s difficult to verify this fully.

The most common way to use OpenAI models in a HIPAA-compliant setup is to go through Microsoft Azure’s managed services, which have specific coverage for HIPAA and HITRUST.

Using AWS Bedrock

For AWS Bedrock, AWS has published the EULA for each model provided on the platform. Note that the terms in each of these differ from the official terms of the underlying model providers. In the AWS variants, there are very clear protections around user data.

Here are the legal terms of use for each model class available on AWS Bedrock:

- Anthropic (Anthropic on Bedrock : January 2, 2024)

- Meta (Standard AWS Customer Agreement)

- Mistral (Standard AWS Customer Agreement)

- Amazon Titan (Standard AWS Customer Agreement)

- Cohere (Cohere AI Services : May 23, 2024)

- Stability AI (Stability Amazon Bedrock : May 30, 2023)

The OSS models (Llama / Mistral) are covered under the standard AWS Customer Agreement, while Anthropic, Cohere, and Stability have distinct agreements covering use. Each agreement should be validated independently, but on initial review they each contain language that’s in keeping with most HIPAA-compliant agreements.

Excerpts from Anthropic on Bedrock : January 2, 2024 (emphasis ours)

Section B: Customer Content. The technology provided by Anthropic to AWS to enable Customer’s access to the Services does not give Anthropic access to Customer’s AWS instance, including Prompts or Outputs it contains (“Customer Content”). Anthropic does not anticipate obtaining any rights in or access to Customer Content under these Terms. As between the Parties and to the extent permitted by applicable law, Anthropic agrees that Customer owns all Outputs, and disclaims any rights it receives to the Customer Content under these Terms. Subject to Customer’s compliance with these Terms, Anthropic hereby assigns to Customer its right, title and interest (if any) in and to Outputs. Anthropic may not train models on Customer Content from Services. Anthropic receives usage data from AWS to monitor compliance with the AUP (as defined below) and understand Service performance (“Usage Data”).

Usage Data excludes Customer Content. For example, Anthropic receives notifications about the volume of Customer’s usage of the Services.

The main highlights above are:

- The explicit exclusion of Customer Content from training data.

- Clarification that “Usage Data” does not include any Customer Content.

Section D.4

Destruction Request. Recipient will destroy Discloser’s Confidential Information promptly upon request, except copies in Recipient’s automated back-up systems, which will remain subject to these obligations of confidentiality while maintained.

Unlike GDPR, HIPAA does not explicitly provide a right for individuals to request data deletion. The primary data destruction requirements within HIPAA focus on the proper disposal of protected health information (PHI).